Introduction to Local AI Models in Opera

Choosing a locally installed AI model keeps your personal data on your own device.

Upgrading to a New AI Source

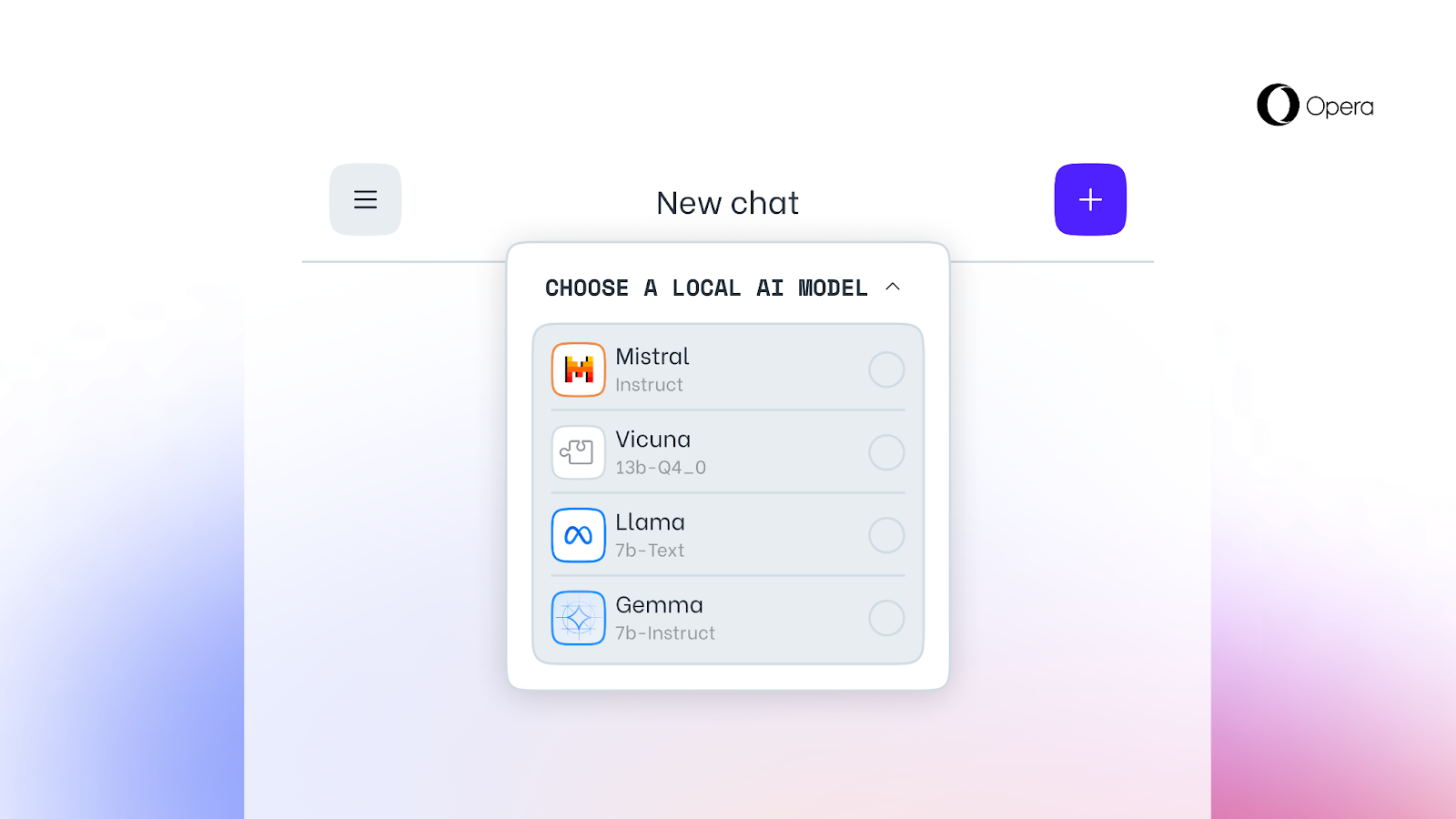

Opera, the company behind the popular independent browser of the same name, is launching an update that lets you change where your AI comes from. Most AI assistants rely on a large language model (LLM) running on a remote server. Opera's browser, by contrast, will support LLMs that run locally on your own computer.

Benefits of Downloading Local LLMs

Opera has announced "experimental support" for about 150 local LLM variants from roughly 50 model families, including Meta's Llama and Google's Gemma. Initially, this support is available only to developers, who can use it to select their preferred LLM and test it with their own prompts.

To use these features, developers must first download the chosen model to their computer and then integrate it into their applications. It is not yet clear what this will look like once the feature is opened up to non-developers.

Potential for Wider Use

If the testing phase succeeds, you may soon be able to choose your in-browser AI assistant: either Opera's own Aria service or one of the many available local models. Picking a local model could significantly improve the privacy of any data you share with the AI.

“Using Local Large Language Models means users’ data stays on their device, enabling the use of generative AI without transmitting information to a server,” Opera explained in a press release. In other words, keeping your data local is the safer option.